Abstract

Modern computation based on von Neumann architecture is now a mature cutting-edge science. In the von Neumann architecture, processing and memory units are implemented as separate blocks interchanging data intensively and continuously. This data transfer is responsible for a large part of the power consumption. The next generation computer technology is expected to solve problems at the exascale with 1018 calculations each second. Even though these future computers will be incredibly powerful, if they are based on von Neumann type architectures, they will consume between 20 and 30 megawatts of power and will not have intrinsic physically built-in capabilities to learn or deal with complex data as our brain does. These needs can be addressed by neuromorphic computing systems which are inspired by the biological concepts of the human brain. This new generation of computers has the potential to be used for the storage and processing of large amounts of digital information with much lower power consumption than conventional processors. Among their potential future applications, an important niche is moving the control from data centers to edge devices. The aim of this roadmap is to present a snapshot of the present state of neuromorphic technology and provide an opinion on the challenges and opportunities that the future holds in the major areas of neuromorphic technology, namely materials, devices, neuromorphic circuits, neuromorphic algorithms, applications, and ethics. The roadmap is a collection of perspectives where leading researchers in the neuromorphic community provide their own view about the current state and the future challenges for each research area. We hope that this roadmap will be a useful resource by providing a concise yet comprehensive introduction to readers outside this field, for those who are just entering the field, as well as providing future perspectives for those who are well established in the neuromorphic computing community.

Export citation and abstract BibTeX RIS

Introduction

Nini Pryds1, Dennis Valbjørn Christensen1, Bernabe Linares-Barranco2, Daniele Ielmini3 and Regina Dittmann4

1Technical University of Denmark

2Instituto de Microelectrónica de Sevilla, CSIC and University of Seville

3Politecnico di Milano and IU.NET

4Forschungszentrum Jülich GmbH

Computers have become essential to all aspects of modern life and are omnipresent all over the globe. Today, data-intensive applications have placed a high demand on hardware performance, in terms of short access latency, high capacity, large bandwidth, low cost, and ability to execute artificial intelligence (AI) tasks. However, the ever-growing pressure for big data creates additional challenges: on the one hand, energy consumption has become a remarkable challenge, due to the rapid development of sophisticated algorithms and architectures. Currently, about 5%–15% of the world's energy is spent in some form of data manipulation, such as transmission or processing [1], and this fraction is expected to rapidly increase due to the exponential increase of data generated by ubiquitous sensors in the era of internet of things. On the other hand, data processing is increasingly limited by the memory bandwidth due to the von-Neumann's architecture with physical separation between processing and memory units. While the von Neumann computer architecture has made an incredible contribution to the world of science and technology for decades, its performance is largely inefficient due to the relatively slow and energy demanding data movement.

Conventional von Neumann computers based on complementary metal oxide semiconductor (CMOS) technology do not possess the intrinsic capabilities to learn or deal with complex data as the human brain does. To address the limits of digital computers, there are significant research efforts worldwide in developing profoundly different approaches inspired by biological principles. One of these approaches is the development of neuromorphic systems, namely computing systems mimicking the type of information processing in the human brain.

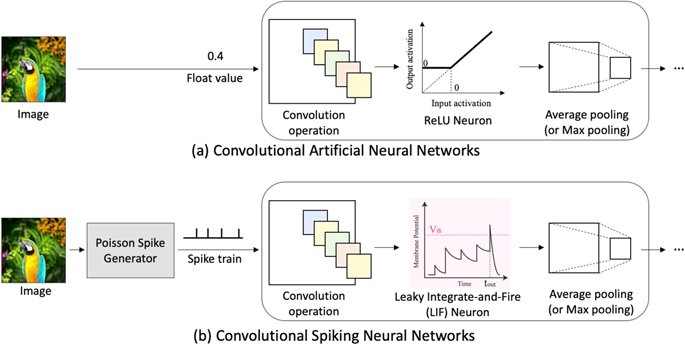

The term 'neuromorphic' was originally coined in the 1990s by Carver Mead to refer to mixed signal analog/digital very large scale integration computing systems that take inspiration from the neuro-biological architectures of the brain [2]. 'Neuromorphic engineering' emerged as an interdisciplinary research field that focused on building electronic neural processing systems to directly 'emulate' the bio-physics of real neurons and synapses [3]. More recently, the definition of the term neuromorphic has been extended in two additional directions [4]. Firstly, the term neuromorphic was used to describe spike-based processing systems engineered to explore large-scale computational neuroscience models. Secondly, neuromorphic computing comprises dedicated electronic neural architectures that implement neuron and synapse circuits. Note that this concept is distinct from AI machine learning approaches which are based on pure software algorithms developed to minimize the recognition error in pattern recognition tasks [5]. However, a precise definition of neuromorphic computing is somewhat debated. It can range from very strict high-fidelity mimicking of neuroscience principles where very detailed synaptic chemical dynamics are mandatory, to very vague high-level loosely brain-inspired principles, such as the simple vector (input) times matrix (synapses) multiplication. In general, as of today, there is a wide consensus that neuromorphic computing should at least encompass some time-, event-, or data-driven computation. In this sense, systems like spiking neural networks (SNN), sometimes referred to as the third generation of neural networks [6], are strongly representative. However, there is an important cross-fertilization between the technologies required to develop efficient SNNs and those for more traditional non-SNN, referred to as artificial neural networks (ANN), which are typically more time-step-driven. While the former definition of neuromorphic computing is more plausible, in this roadmap we aim at broadening the scope to emphasize the cross-fertilization between ANN and SNN.

Nature is a vital inspiration for the advancement to a more sustainable computing scenario, where neuromorphic systems display much lower power consumption than conventional processors, due to the integration of non-volatile memory and analog/digital processing circuits as well as the dynamic learning capabilities in the context of complex data. Building ANNs that mimic a biological counterpart is one of the remaining challenges in computing. If the fundamental technical issues are solved in the next few years, the neuromorphic computing market is projected to rise from $0.2 billion in 2025 to $22 billion in 2035 [7] as neuromorphic computers with ultra-low power consumption and high speed advance and drive demands for neuromorphic devices.

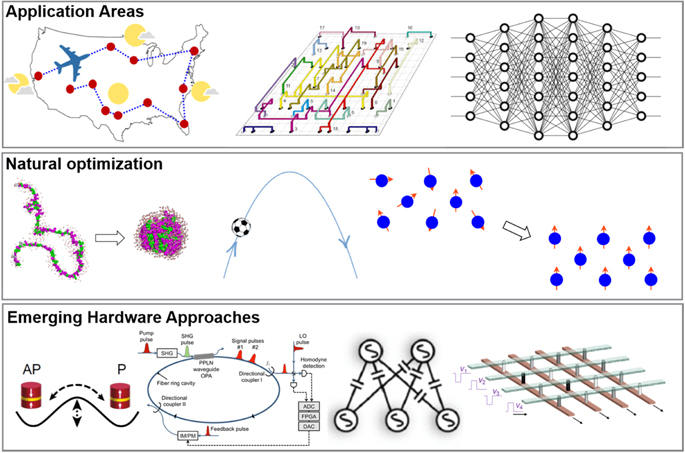

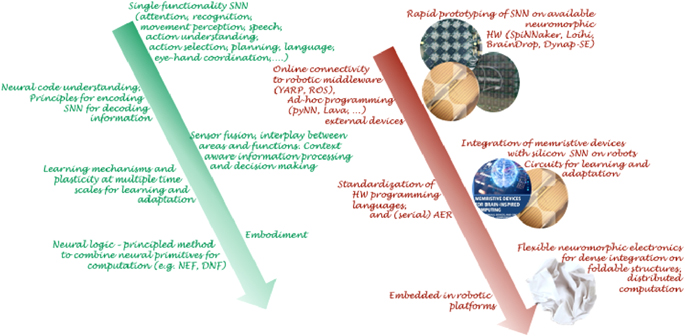

In line with these increasingly pressing issues, the general aim of the roadmap on neuromorphic computing and engineering is to provide an overview of the different fields of research and development that contribute to the advancement of the field, to assess the potential applications of neuromorphic technology in cutting edge technologies and to highlight the necessary advances required to reach these. The roadmap addresses:

- Neuromorphic materials and devices

- Neuromorphic circuits

- Neuromorphic algorithms

- Applications

- Ethics

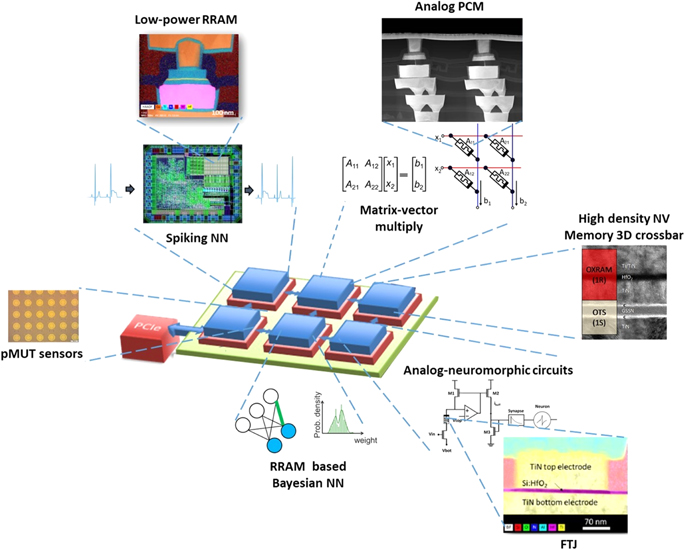

Neuromorphic materials and devices: To advance the field of neuromorphic computing and engineering, the exploration of novel materials and devices will be of key relevance in order to improve the power efficiency and scalability of state-of-the-art CMOS solutions in a disruptive manner [4, 8]. Memristive devices, which can change their conductance in response to electrical pulses [9–11], are promising candidates to act as energy- and space-efficient hardware representation for synapses and neurons in neuromorphic circuits. Memristive devices have originally been proposed as binary non-volatile random-access memory and research in this field has been mainly driven by the search for higher performance in solid-state drive technologies (e.g., flash replacement) or storage class memory [12]. However, thanks to their analog tunability and complex switching dynamics, memristive devices also enable novel computing functions such as analog computing or the realisation of brain-inspired learning rules. A large variety of different physical phenomena has been reported to exhibit memristive behaviour, including electronic effects, ionic effects as well as structural or ferroic ordering effects. The material classes range from magnetic alloys, metal oxides, chalcogenides to 2D van de Waals materials or organic materials. Within this roadmap, we cover a broad range of materials and phenomena with different maturity levels with respect to their use in neuromorphic circuits. We consider emerging memory devices that are already commercially available as binary non-volatile memory such as phase-change memory (PCM), magnetic random-access memory, ferroelectric memory as well as redox-based resistive random-access memory and review their prospects for neuromorphic computing and engineering. We complement it with nanowire networks, 2D materials, and organic materials that are less mature but may offer extended functionalities and new opportunities for flexible electronics or 3D integration.

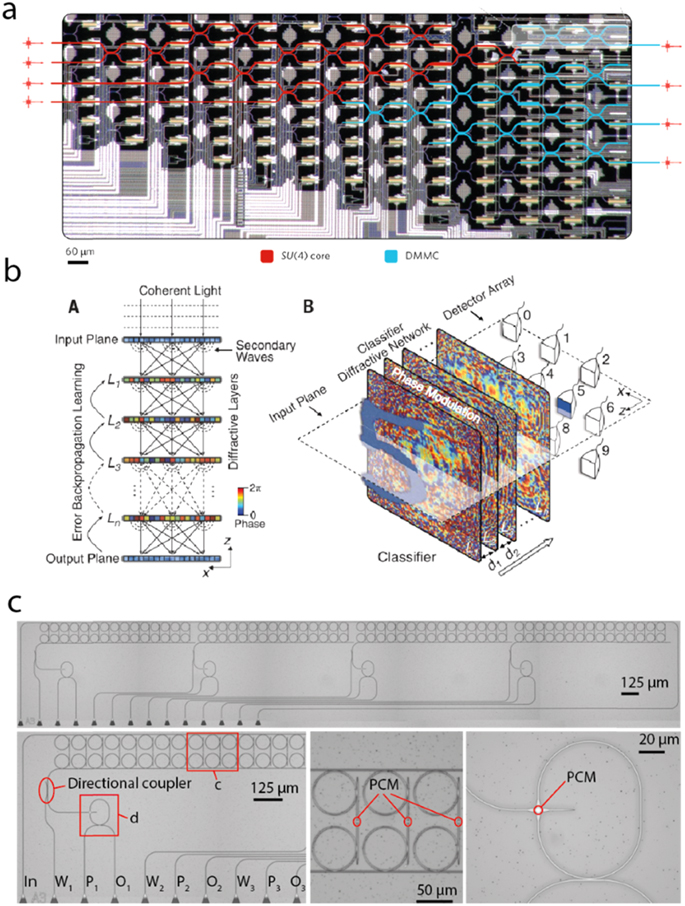

Neuromorphic circuits: Neuromorphic devices can be integrated with conventional CMOS transistors to develop fully functional neuromorphic circuits. A key element in neuromorphic circuits is their non-von Neumann architecture, for instance consisting of multiple cores each implementing distributed computing and memory. Both SSNs, adopting spikes to represent, exchange and compute data in analogy to action potentials in the brain, as well as circuits that are only loosely inspired by the brain, such as ANNs, are generally included in the roster of neuromorphic circuits, thus will be covered in this roadmap. Regardless of the specific learning and processing algorithm, a key processing element in neuromorphic circuits is the neural network, including several synapses and neurons. Given the central role of the neural network, a significant research effort is currently aimed at technological solutions to realize dense, fast, and energy-efficient neural networks by inmemory computing [13]. For instance, a memory array can accelerate the matrix-vector multiplication (MVM) [14]. This is a common feature of many neuromorphic circuits, including spiking and non-spiking networks, and takes advantage of Ohm's and Kirchhoff's laws to implement multiplication and summation in the network. The MVM crosspoint circuit allows for the straightforward hardware implementation of synaptic layers with high density, high real-time processing speed, and high energy efficiency, although the accuracy is challenged by stochastic variations in memristive devices in particular, and analog computing in general. An additional circuit challenge is the mixed analog-digital computation, which results in the need for large and energyhungry analog-digital converter circuits at the interface between the analog crosspoint array and the digital system. Finally, neuromorphic circuits seem to take the most benefit from hybrid integration, combining front-end CMOS technology with novel memory devices that can implement MVM and neuro-biological functions, such as spike integration, short-term memory, and synaptic plasticity [15]. Hybrid integration may also need to extend, in the long term, to alternative nanotechnology concepts, such as bottom-up nanowire networks [16], and alternative computing concepts, such as photonic [17] and even quantum computing [18], within a single system or even a single chip with 3D integration. In this scenario, a roadmap for the development and assessment of each of these individual innovative concepts is essential.

Neuromorphic algorithms: A fundamental challenge in neuromorphic engineering for real application systems is to train them directly in the spiking domain in order to be more energy-efficient, more precise, and also be able to continuously learn and update the knowledge on the portable devices themselves without relying on heavy cloud computing servers. Spiking data tend to be sparse with some stochasticity and embedded noise, interacting with non-ideal non-linear synapses and neurons. Biology knows how to use all this to its advantage to efficiently acquire knowledge from the surrounding environment. In this sense, computational neuroscience can be a key ingredient to inspire neuromorphic engineering, and learn from this discipline how brains perform computations at a variety of scales, from small neurons ensembles, mesoscale aggregations, up to full tissues, brain regions and the complete brain interacting with peripheral sensors and motor actuators. On the other hand, fundamental questions arise on how information is encoded in the brain using nervous spikes. Obviously, to maximize energy efficiency for both processing and communication, the brain maximizes information per unit spike [19]. This means unravelling the information encoding and processing by exploiting spatio-temporal signal processing to maximize information while minimizing energy, speed, and resources.

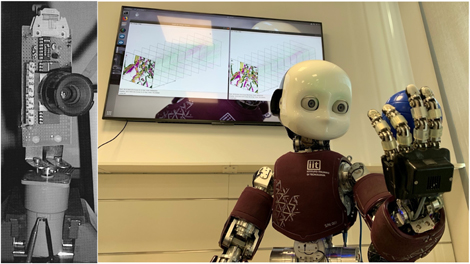

Applications: The realm of applications for neuromorphic computing and engineering continues to grow at a steady rate, although remaining within the boundaries of research and development. While it is becoming clear that many applications are well suited to neuromorphic computing and engineering, it is also important to identify new potential applications to further understand how neuromorphic materials and hardware can address them. The roadmap includes some of these emerging applications as examples of biologically-inspired computing approaches for implementation in robots, autonomous transport capability or in perception engineering where the applications are based on integration with sensory modalities of humans.

Ethics: While the future development and application of neuromorphic systems offer possibilities beyond the state of the art, the progress should also be addressed from an ethical point of view where, e.g., lack of transparency in complex neuromorphic systems and autonomous decision making can be a concern. The roadmap thus ends with a final section addressing some of the key ethical questions that may arise in the wake of advancements in neuromorphic computation.

We hope that this roadmap represents an overview and updated picture of the current state-of-the-art as well as being the future projection in these exciting research areas. Each contribution, written by leading researchers in their topic, provides the current state of the field, the open challenges, and a future perspective. This should guide the expected transition towards efficient neuromorphic computations and highlight the opportunities for societal impact in multiple fields.

Acknowledgements

DVC and NP acknowledge the funding from Novo Nordic Foundation Challenge Program for the BioMag project (Grant No. NNF21OC0066526), Villum Fonden, for the NEED project (00027993), Danish Council for Independent Research Technology and Production Sciences for the DFF Research Project 3 (Grant No. 00069B), the European Union's Horizon 2020, Future and Emerging Technologies (FET) programme (Grant No. 801267) and Danish Council for Independent Research Technology and Production Sciences for the DFF-Research Project 2 (Grant No. 48293). RD acknowledges funding from the German Science foundation within the SFB 917 'Nanoswitches', by the Helmholtz Association Initiative and Networking Fund under Project Number SO-092 (Advanced Computing Architectures, ACA), the Federal Ministry of Education and Research (project NEUROTEC Grant No. 16ES1133K) and the Marie Sklodowska-Curie H2020 European Training Network, 'Materials for neuromorphic circuits' (MANIC), grant Agreement No. 861153. BLB acknowledges funding from the European Union's Horizon 2020 (Grants 824164, 871371, 871501, and 899559). DI acknowledges funding from the European Union's Horizon 2020 (Grants 824164, 899559 and 101007321).

Section 1. Materials and devices

1. Phase-change memory devices

Abu Sebastian1, Manuel Le Gallo1 and Andrea Redaelli2

1IBM Research - Zurich, Switzerland

2ST Microelectronics, Agrate, Italy

1.1. Status

PCM exploits the behaviour of certain phase-change materials, typically compounds of Ge, Sb and Te, that can be switched reversibly between amorphous and crystalline phases of different electrical resistivity [20]. A PCM device consists of a certain nanometric volume of such phase change material sandwiched between two electrodes (figure 1).

Figure 1. Key physical attributes that enable neuromorphic computing. (a) Non-volatile binary storage facilitates in-memory logical operations relevant for applications such as hyper-dimensional computing. (b) Analog storage enables efficient matrix-vector multiply (MVM) operations that are key to applications such as deep neural network (DNN) inference. (c) The accumulative behaviour facilitates applications such as DNN training and emulation of neuronal and synaptic dynamics in SNN.

Download figure:

Standard image High-resolution imageIn recent years, PCM devices are being explored for brain-inspired or neuromorphic computing mostly by exploiting the physical attributes of these devices to perform certain associated computational primitives in-place in the memory itself [13, 21]. One of the key properties of PCM that enables such inmemory computing (IMC) is simply the ability to store two levels of resistance/conductance values in a non-volatile manner and to reversibly switch from one level to the other (binary storage capability). This property facilitates in-memory logical operations enabled through the interaction between the voltage and resistance state variables [21]. Applications of in-memory logic include database query [22] and hyper-dimensional computing [23].

Another key property of PCM that enables IMC is its ability to achieve not just two levels but a continuum of resistance values (analogue storage capability) [20]. This is typically achieved by creating intermediate phase configurations through the application of partial RESET pulses. The analogue storage capability facilitates the realization of MVM operations in O(1) time complexity by exploiting Kirchhoff's circuit laws. The most prominent application for this is DNN inference [24]. It is possible to map each synaptic layer of a DNN to a crossbar array of PCM devices. There is a widening industrial interest in this application owing to the promise of significantly improved latency and energy consumption with respect to existing solutions. This in-memory MVM operations also enable non-neuromorphic applications such as linear-solvers and compressed sensing recovery [21].

The third key property that enables IMC is the accumulative property arising from the crystallization kinetics. This property can be utilized to implement DNN training [25, 26]. It is also the central property that is exploited for realizing local learning rules like spike-timing-dependent plasticity in SNN [27, 28]. In both cases, the accumulative property is exploited to implement the synaptic weight update in an efficient manner. It has also been exploited to emulate neuronal dynamics [29].

Note that, PCM is at a very high maturity level of development with products already on the market and a well-established roadmap for scaling. This fact, together with the ease of embedding PCM on logic platforms (embedded PCM) [30] make this technology of unique interest for neuromorphic computing and IMC in general.

1.2. Current and future challenges

PCM devices have several attractive properties such as the ability to operate them at timescales on the order of tens of nanoseconds. The cycling endurance is orders of magnitude higher for PCM compared to other non-volatile memory devices such as flash memory. The retention time can also be tuned relatively easily with the appropriate choice of materials, although the retention time associated with the intermediate phase configurations could be substantially lower than that of the full amorphous state.

However, there are also several device-level challenges as shown in figure 2. One of the key challenges associated with the use of PCM for in-memory logic operations is the wide distribution of the SET states. These distributions could detrimentally impact the evaluation of logical operations. The central challenge associated with in-memory MVM operations is the limited precision arising from the 1/f noise as well as conductance drift. Drift is attributed to the structural relaxation of the melt-quenched amorphous phase [31]. Temperature-induced conductance variations could also pose challenges. One additional challenge is related to the stoichiometric stability during cycling where ion migration effects can occur [32]. Moreover, the accumulative behaviour in PCM is highly nonlinear and stochastic. While one could exploit this intrinsic stochasticity to realize stochastically firing neurons and for stochastic computing, this behaviour is detrimental for applications such as DNN training in which the conductance must be precisely modulated.

Figure 2. Key challenges associated with PCM devices. (a) The SET/RESET conductance values exhibit broad distributions which is detrimental for applications such as in-memory logic. (b) The drift and noise associated with analogue conductance values results in imprecise matrixvector multiply operations. (c) The nonlinear and stochastic accumulative behaviour result in imprecise synaptic weight updates.

Download figure:

Standard image High-resolution imagePCM-based IMC has the potential for ultra-high compute density since PCM devices can be scaled to nanoscale dimensions. However, it is not straightforward to fabricate such devices in a large array due to fabrication challenges such as etch damage and deposition of materials in high-aspect ratio pores [33]. The integration density is also limited by the access device, which could be a selector in the backend-of-the-line (BEOL) or front-end bipolar junction transistors (BJT) or metal-oxide-semiconductor field effect transistors (MOSFET). The threshold voltage must be overcome when SET operations are performed, so the access device must be able to manage voltages at least as high as the threshold voltage. While MOSFET selector size is mainly determined by the PCM RESET current, the BJT and BEOL selectors can guarantee a minimum cell size of 4F2, leading to very high density [34]. However, BEOL selector-based arrays have some drawbacks in terms of precise current control, while the management of parasitic drops is more complex for BJT-based arrays [35].

1.3. Advances in science and technology to meet challenges

A promising solution towards addressing the PCM nonidealities such as 1/f noise and drift is that of projected phase-change memory (projected PCM) [36, 37]. In these devices, there is a non-insulating projection segment in parallel to the phase-change material segment. By exploiting the highly nonlinear I–V characteristics of phase-change materials, one could ensure that during the SET/RESET process, the projection segment has minor impact on the operation of the device. An increase in the reset current is anyway expected and some work should be done on material engineering side to compensate for that. However, during read, the device conductance is mostly determined by the projection segment that appears parallel to the amorphous phase-change segment. Recently, it was shown that it is possible to achieve remarkably high precision in-memory scalar multiplication (equivalent to 8 bit fixed point arithmetic) using projected PCM devices [38]. These projected PCM devices also facilitate array-level temperature compensation schemes. Alternate multi-layered PCM devices have also been proposed that exhibit substantially lower drift [39].

There is a perennial focus on trying to reduce the RESET current via scaling the switchable volume of the PCM device. Either by shrinking the overall dimension of the device in a confined geometry or by scaling the bottom electrode dimensions of a mushroom-type device. The exploration of new material classes such as single elemental antimony could help with the scaling challenge [40].

The limited endurance and various other non-idealities associated with the accumulative behaviour such as limited dynamic range, nonlinearity and stochasticity can be partially circumvented with multiPCM synaptic architectures. Recently, a multi-PCM synaptic architecture was proposed that employs an efficient counter-based arbitration scheme [41]. However, to improve the accumulation behaviour at the device level, more research is required on the effect of device geometries as well as the randomness associated with crystal growth.

Besides conventional electrical PCM devices, photonic memory devices based on phase-change materials, which can be written, erased, and accessed optically, are rapidly bridging a gap towards allphotonic chip-scale information processing. By integrating phase-change materials onto an integrated photonics chip, the analogue multiplication of an incoming optical signal by a scalar value encoded in the state of the phase change material was achieved [42]. It was also shown that by exploiting wavelength division multiplexing, it is possible to perform convolution operations in a single time step [43]. This creates opportunities to design phase-change materials that undergo faster phase transitions and have a higher optical contrast between the crystalline and amorphous phases [44].

1.4. Concluding remarks

The non-volatile binary storage, analogue storage and accumulative behaviour associated with PCM devices can be exploited to perform in-memory computing (IMC). Compared to other non-volatile memory technologies, the key advantages of PCM are the well understood device physics, volumetric switching and easy embeddability in a CMOS platform. However, there are several device and fabrication-level challenges that need be overcome to enable PCM-based IMC and this is an active area of research.

It will also be rather interesting to see how PCM-based neuromorphic computing will eventually be commercialized. Prior to true IMC, a hybrid architecture where PCM memory chips are used to store synaptic weights in a non-volatile manner while the computing is performed in a stacked logic chip is likely to be considered as an option by the industry. Despite the tight interconnect between the stacked chips, data transfer will remain a bottleneck for this approach. A better solution could be PCM directly embedded with the logic itself BEOL without any interconnect bottleneck and eventually we could foresee full-fledged non-von Neumann accelerator chips where the embedded PCM is also used for analogue IMC.

Acknowledgements

This work was supported in part by the European Research Council through the European Union's Horizon 2020 Research and Innovation Programme under Grant No. 682675.

2. Ferroelectric devices

Stefan Slesazeck1 and Thomas Mikolajick1,2

1NaMLab gGmbH

2Institute of Semiconductors and Microsystems, TU Dresden, Dresden, Germany

2.1. Status

Ferroelectricity was firstly discovered in 1920 by Valasek in Rochelle salt [45] and describes the ability of a non-centrosymmetric crystalline material to exhibit a permanent and switchable electrical polarization due to the formation of stable electric dipoles. Historically, the term ferroelectricity stems from the analogous behavior with the magnetization hysteresis of ferromagnets when plotting the ferroelectric polarization versus the electrical field. Regions of opposing polarization are called domains. The polarization direction of such domains can be switched typically by 180° but, based on the crystal structure, other angles are also possible. Since the discovery of the stable ferroelectric barium titanate in 1943, ferroelectrics have found application in capacitors in electronics industry. Already, in the 1950s, ferroelectric capacitor (FeCAP) based memories (FeRAM) have been proposed [46], where the information is stored as polarization state of the ferroelectric material. Read and write operation are performed by applying an electric field larger than the coercive field EC. The destructive read operation determines the switching current of the FeCAP upon polarization reversal, thus requiring a write-back operation after readout. Thanks to the development of mature processing techniques for ferroelectric lead zirconium tantalate FeRAMs, these have been commercially available since the early 1990s [47]. However, the need for a sufficiently large capacitor together with the limited thin-film manufacturability of perovskite materials has so far restricted their use to niche applications [48].

The ferroelectric field effect transistors (FeFET) that was proposed in 1957 [49] features a FeCAP as gate insulator, modulating the transistor's threshold voltage that can be sensed non-destructively by measuring the drain-source current. Perovskite based FeFET memory arrays with up to 64 kBit have been demonstrated [50]. However, due to difficulties in the technological implementation, limited scalability and data retention issues, no commercial devices became available.

The ferroelectric tunneling junction (FTJ) was proposed by Esaki et al in 1970 s as a 'polar switch' [51] and was firstly demonstrated in 2009 using a BaTiO3 ferroelectric layer [52]. The FTJ features a ferroelectric layer sandwiched between two electrodes, thus modifying the tunneling electro-resistance. A polarization-dependent current is measured non-destructively when applying electrical fields smaller than EC.

Since the fortuitous discovery of ferroelectricity in hafnium oxide (HfO2) in 2008 and its first publication in 2011 [53] the well-established and CMOS-compatible fluorite-structure material has been extensively studied and has recently gained a lot of interest in the field of nonvolatile memories and beyond von-Neumann computing [54, 55] (figure 3).

Figure 3. The center shows two typical ferroelectric crystals and the corresponding PV-hysteresis curve. The top figure illustrates (a) FeCAP based FeRAM, the figure on the bottom left shows a FeFET and the bottom right an FTJ.

Download figure:

Standard image High-resolution image2.2. Current and future challenges

Very encouraging electrical results of fully front-end-of-line integrated FeFET devices featuring switching speeds <50 ns at <5 V pulse voltage have been reported recently based on >1 Mbit memory arrays [56]. The ability of fine-grained co-integration of FeFET memory devices together with CMOS logic transistors paves the way for the realization of braininspired architectures to overcome the limitations of the von-Neumann bottleneck, which restricts the data transfer due to limited memory and data bus bandwidth [57]. However, one of the main challenges for the FeFET devices and therefore a topic of intense research is the formation of ferroelectric HfO2-based thin films featuring a uniform polarization behavior at nano-scale as an important prerequisite for the realization of small scaled devices with feature sizes <100 nm.

Another important challenge for many application cases is the limited cycling endurance of silicon-based FeFETs that is typically in the range of 105 cycles. This value is mainly dictated by the breakdown of the dielectric SiO2 interfacial layer that forms between the Si channel and the ferroelectric gate insulator.

FeCAPs have been successfully integrated into the back-end-of-line (BEOL) of modern CMOS technologies and operation of a HfO2-based FeRAM memory array at 2.5 V and 14 ns switching pulses was successfully demonstrated [58]. At this point the main challenge is the decrease of the ferroelectric layer thickness well below 10 nm to allow scaling of 3D capacitors towards the 10 nm node. Moreover, phenomenon such as the so called 'wake-up effect' with increasing of Pr for low cycle counts as well as the 'fatigue effect' resulting in a reduction of Pr at high cycle counts due to oxygen vacancy redistribution [59] and defect generation have to be tackled. That is especially important for fine-grained circuit implementations where the switching properties of single ferroelectric devices impact the designed operation point of analogue circuits.

One of the most interesting benefits of FTJ devices is the small current density making them very attractive for applications requiring massive parallel operations such as analogue matrixvector-multiplications in larger cross-bar structures [60]. However, increasing the ratio between the on-current density and the self-capacitance of FTJ devices turns out to be one of the main challenges to increasing the reading speed for these devices. The tunneling current densities depend strongly on the thickness of the ferroelectric layer and the composition of the multi-layer stacks. The formation of very thin ferroelectric layers is hindered by unintentional formation of interfacial dead layers towards the electrodes and increasing leakage currents due to defects and grain-boundaries in the poly-crystalline thin films.

2.3. Advances in science and technology to meet challenges

Although ferroelectricity in hafnium oxide has been extensively studied for over one decade now, there are still many open questions in understanding the formation of the ferroelectric Pca21 phase and regarding the interaction with material layers such as electrodes, dielectric tunneling barriers in multi-layer FTJs or interfacial layers in FeFETs. Moreover, the interplay between charge trapping phenomenon and ferroelectric switching mechanisms [61], the trade-off between switching speed and voltage of the nucleation limited switching and its impact on device reliability or the different behavior of abrupt single domain switching [55] and smooth polarization transitions in negative capacitance devices that were observed in the very similar material stacks are still not completely understood. However, that knowledge will be an important ingredient for proper optimization of material stacks as well as electrical device operation conditions.

On the materials side, the stabilization of the ferroelectric orthorhombic Pca21 phase in crystallized HfO2 thin films has to be optimized further. Adding dopants, changing oxygen vacancy densities or inducing stress by suitable material stack and electrode engineering are typical measures. In most cases a poly-crystalline material layer is attained consisting of a mixture of different crystalline ferroelectric and non-ferroelectric phase fractions. Moreover, ferroelectric grains that differ in size or orientation of the polarization axis, electronically active defects as well as grain size dependent surface energy effects give rise to the formation of ferroelectric domains that possess different electrical properties in terms of coercive field EC (typical values ∼1 MV cm−1) or remnant polarization Pr (typical values 10–40 μC cm−2) with impact on the device-to-device variability and the gradual switching properties that are important especially for analog synaptic devices. Some drawbacks of the poly-crystallinity of ferroelectric HfO2- and ZrO2-based thin films could be tackled by the development of epitaxial growth of monocrystalline ferroelectric layers [62] where domains might extend over a larger area. The case of FTJs in particular demonstrate the effect of domain wall motion that might allow a more gradual and analogue switching behavior even in small scaled devices. The utilization of an anti-ferroelectric hysteretic switching that was demonstrated in ZrO2 thin films bears the potential to overcome some limitations that are related to the high coercive field of ferroelectric HfO2, such as operation voltages being larger than the typical core voltages in modern CMOS technologies or the limited cycling endurance [63].

Finally, in addition to the very encouraging results adopting ferroelectric HfO2, in 2019 another promising material was realized. AlScN is a semiconductor processing compatible and already utilized piezoelectric material that was made ferroelectric [64] (figure 4).

Figure 4. Main elements of a neural network. Neurons can be realized using scaled down FeFETs [55] while synapses can be realized using FTJs [54] or medium to large scale FeFETS. Adapted with permission from [54]. Copyright (2020) American Chemical Society and [55]. Copyright (2018) the Royal Society of Chemistry.

Download figure:

Standard image High-resolution image2.4. Concluding remarks

The discovery of ferroelectricity in hafnium oxide has led to a resumption in the research on ferroelectric memory devices, since hafnium oxide is a well-established and fully CMOS compatible material in both front end of line and back end of line processing. Besides the expected prospective realization of densely integrated non-volatile and ultra-low-power ferroelectric memories in near future, this development directly leads to the adoption of the trinity of ferroelectric memory devices—FeCAP, FeFET and FTJ—for beyond von Neumann computing. While in the memory application the important topic of reliability on the array level is yet to be solved, for neuromorphic applications the linear switching to many different states, especially in scaled down devices, is a topic that needs further attention. Moreover, very specific properties of the different ferroelectric device types demand for the development of new circuit architectures that facilitate a proper device operation taking into account the existing non-idealities. A thorough design technology co-optimization will be the key to fully exploit their potential in neuromorphic and edge computing. Finally, large scale demonstrations of ferroelectrics based neuromorphic circuits need to be investigated to identify all possible issues.

Acknowledgements

This work was financially supported out of the State budget approved by the delegates of the Saxon State Parliament.

3. Valence change memory

Sabina Spiga1 and Stephan Menzel2

1CNR-IMM, Unit of Agrate Brianza, via C. Olivetti 2, Agrate Brianza (MB), Italy

2FZ Juelich (PGI-7), Juelich, Germany

3.1. Status

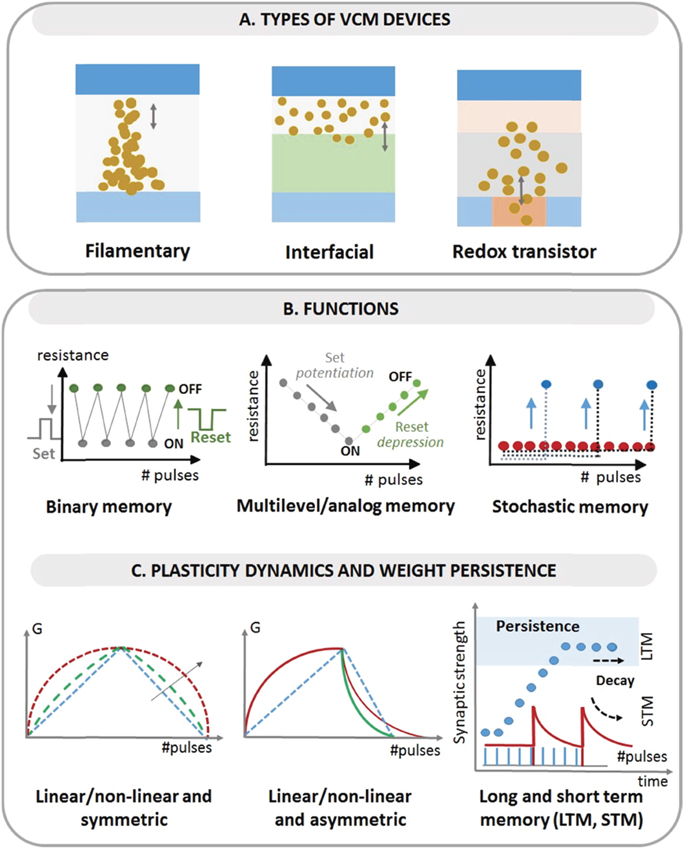

Resistive random access memories (RRAMs), also named memristive devices, change their resistance state upon electrical stimuli. They can store and compute information at the same time, thus enabling in-memory and brain-inspired computing [13, 65]. RRAM devices relying on oxygen ion migration effects and subsequent valence changes are named valence change memory (VCM) [66]. They have been proposed to implement electronic synapses in hardware neural networks, due to the ability to adapt their strength (conductance) in an analogue fashion as a function of incoming electrical pulses (synaptic plasticity), leading to long-term (short-term) potentiation and depression. In addition, learning rules such as spike-time or spike-rate dependent plasticity, paired-pulse facilitation or the voltage threshold—based plasticity have been demonstrated; the stochasticity of the switching process has been exploited for stochastic update rules [67–69]. Most of the VCM devices are based on a two-terminal configuration, and the switching geometry involves either confined filamentary, or interfacial regions (figure 5(A)). Filamentary VCMs are today the most advanced in terms of integration and scaling. Their switching mechanism relies on the creation and rupture of conductive filaments (CF), formed by a localized concentration of defects, shorting the two electrodes. The modulation/control of the CF diameter and/or CF dissolution can lead to two or multiple stable resistance states [70, 71]. Prototypes of neuromorphic chips have been recently shown, integrating HfOx and TaOx -based filamentary-VCM as synaptic nodes in combination with CMOS neurons [72–74]. In interfacial VCM devices, the conductance scales with the junction area of the device, and the mechanism is related to a homogenous oxygen ion movement through the oxides, either at the electrode/oxide or oxide/oxide interface. Reference material systems are based on complex oxides, such as bismuth ferrite [75] and praseodymium calcium manganite [76]; or bilayers stacks, e.g. TiO2/TaO2 [77] and a-Si/TiO2 [78]. Finally, three-terminal VCM redox transistors have been recently studied (figure 5(A) right), where the switching mechanism is related to the control of the oxygen vacancy concentration in the bulk of the transistor channel [79, 80]. While interfacial and redox-transistor devices are today at low technological readiness, and most of the studies are reported at single device level, they promise future advancement in neuromorphic computing in terms of analogue control, higher resistance values, improved reliability, reduced stochasticity with respect to filamentary devices [81]. To design neuromorphic circuits including VCM devices, compact models are requested. For filamentary devices compact models including variability are available [81, 82], but lacking for interfacial VCM and redox-based transistors.

Figure 5. (A) Sketch of the three types of VCM devices (filamentary, interfacial and redox transistor). (B) Possible functionalities that can be implemented by VCM devices, namely binary memory (left), analog/multilevel (centre) and stochastic (right) memory. In the figures, the device resistance evolution is plotted as a function of applied electrical stimuli (pulses). (C) Schematic drawing of some of the interesting properties of VCM for neuromorphic applications, i.e. synaptic plasticity dynamics and type of memory with different long or short retention scales (LTM, STM). Many experimental VCM devices show a non-linear and asymmetric modulation of the conductance (G) update, but plasticity dynamics can be as well modulated by programming strategies or materials engineering.

Download figure:

Standard image High-resolution image3.2. Current and future challenges

VCM devices have been developed in the last 15 years mainly for storage applications, but for neuromorphic applications the required properties differ. In general, desirable properties of memories for neural networks include (i) analogue behaviour or controllable multilevel states, (ii) compatibility with learning rules supporting also online learning, (iii) tuneable short-term and long-term stability of the weights to implement various dynamics and timescales in synaptic and neuronal circuits [67–69]. A significant debate still refers to the linear/non-linear and symmetric/asymmetric conductance update of experimental devices, synaptic resolution (number of resistance levels), and how to exploit or mitigate these features (figures 5(B) and (C)).

Filamentary devices are the most mature type of VCMs. Nevertheless, many issues are pending: e.g. control of multi-level operation, device variability, intrinsic stochasticity, program and read disturbs, and the still too low resistance level range for neuromorphic circuits [83]. Moreover, the understanding/modelling of their switching mechanism is still under debate. Whereas first models including switching variability and read noise are available [81, 82], retention modelling, and the modelling of volatile effects and device failures are current challenges. First hybrid CMOS-VCM chips have been developed demonstrating inference application, but so far they do not support on-chip learning [72–74].

Interfacial VCM devices show in general less variability, less (no) read instability and a very analogue tuning of the conductance states, which can leads to a more deterministic and linear conductance update compared to filamentary devices [76]. Still these properties are not characterized on a high statistical basis. The retention, especially for thin oxide devices, is lower than for filamentary devices, which may be still compatible with some applications. As the conductance scales with area, the achievable high resistance levels promise a low power operation. Typical devices, however, have a large area or thick switching oxides, and scaling them to the nanoscale is an open issue. Moreover, devices showing a large resistance modulation require high switching voltages, not easily compatible with scaled CMOS nodes. The fabrication and characterization of interfacial VCM arrays needs to be further addressed. Simulation models for interfacial VCM are not available yet and need to be developed.

Redox-based VCM transistors have been only shown on a single device level [79, 80]. Thus, reliable statistical data on cycle-to-cycle variability, device-to-device variability and stability of the programmed states is not available yet. Moreover, the trade-off between switching speed and voltage has not been studied in detail. Another challenge is the understanding of the switching mechanism and the development of suitable models for circuit design.

The open challenges for all three types of VCM devices are summarized in table 1.

Table 1. Summary of status and open challenges of the three types of VCM devices.

| Filamentary | Interfacial | Redox transistor | |

|---|---|---|---|

| Single device | Various materials | Various materials, | Recently studied, |

| systems and scaling | scaling at nm scales, still | promising but still at | |

| down to mm scale, low | overall high V and thick | initial development | |

| V operation | oxide | stage | |

| Array | Demonstrated as 1T-1R | Published in few works, | |

| and 1S-1R. Total | up to few Kb, mainly 1R | To be addressed | |

| capacity up to 1–2 Mb in | array only | ||

| recent works | |||

| Monolithic CMOS integration | Demonstrated, down to | Partial demonstration, | |

| the 2× techn. nodes, | mainly for oxide bilayer | To be addressed | |

| and in 3D architectures | and not for perovskites | ||

| Compact models | Available, retention and | ||

| variability modelling to | Almost not availalbe | Almost not available | |

| be optimized | |||

| Binary | Available, with good | Possible, but lack of | Very new devices, |

| endurance (>106–109) | statistical data. | mostly proposed for | |

| and retention (>years) | Endurance and long | multilevel | |

| retention to be | applications, lack of | ||

| optimized | statistical data | ||

| Analog multilevel memory | Hard to control, | Promising, less | Promising, high R |

| multilevel | variability, high R, but | value, deterministic | |

| demonstrated in array | reduced dynamic range | control of G update, | |

| using program/verify | and mainly data for | but shown for single | |

| algorithms | single devices | devices | |

| Stochastic memory | Proposed in some | — | — |

| works, to be further | |||

| validated | |||

| Long term memory (LTM) | Yes, retention at high T | Possible, lack of statistic | Possible. Few studies. |

| and for 6–10 years. | data on array, single | Lack of statistical data | |

| Depends on R levels | device retention up to | ||

| years for some material | |||

| stacks | |||

| Short term memory (STM) | Usually difficult to | Possible, to be further | Possible, to be further |

| achieve controlled | addressed and | addressed. Few | |

| decay | optimized | studies |

3.3. Advances in science and technology to meet challenges

The current challenges for VCM-type devices push the research in various but connected directions, which span from material, to theory, devices and architecture. A better understanding of material properties and microscopic switching mechanisms is definitely required. However, the key step is to demonstrate the device integration in complex circuits and hybrid CMOS-VCM hardware neuromorphic chips. While VCMs are not ideal devices, many issues can be solved or mitigated at circuit level still taking advantage of their properties in term of power, density, and dynamic properties.

In this context, filamentary VCM devices are the most mature technology, but their deployment into neuromorphic computing hardware is still at its infancy. A comprehensive compact model, depicting complete dynamics including retention effects, e.g. to accurately simulate online learning, is required for the development of optimized circuits. On the material level, the biggest issues are read noise and switching variability. Due to the inherent Joule heating effect, the transition time of the conductance switching is very short and depends strongly on the device state [84]. This makes it hard to control the conductance update. Future research could explore very fast pulses in the range of the transition time to update the cell conductance, or use thermal engineering of the device stacks to increase the transition time. Finally, to achieve low power operation, resistance state values should be moved to the MΩ regime.

For interfacial and redox-transistor VCM devices, one of the next important steps is to shift from single device research to large arrays, possibly co-integrated with CMOS. This step enables to collect a large amount of data, which is required for modelling and demonstrating robust neuromorphic functions. It would be highly desirable to identify a reference material system with a robust switching mechanism supported by a comprehensive understanding and modelling from underlying physics to compact and circuits modelling. Indeed, the modelling of these devices are still at its infancy. One open question for both devices is the trade-off between data retention and switching speed. In contrast to the filamentary devices, the velocity of the ions are probably not accelerated by Joule heating. Thus, the voltage needs to be increased more than in filamentary devices, to operate the devices at fast speed [85]. This might limit the application of these device to a certain time domain as the CMOS might not be able to provide the required voltage. By using thinner device layers or material engineering this issue could be addressed.

3.4. Concluding remarks

The VCM device technologies can integrate novel functionalities in hardware as key elements of the synaptic nodes in neural networks, i.e. to store the synaptic weight. Moreover, they can enable new learning algorithms that enable bio-plausible functions over multiple timescales. At the moment, it is still not clear which can be the best 'final' VCM material system and/or VCM device type, having each of them advantages and disadvantages. The missing 'killer' system, with consolidated properties/understanding/easy manufacturing, prevents to concentrate the efforts of the scientific community in single direction to bring VCM device to industrial real applications beyond a niche market. While filamentary VCMs are already been implemented in neuromorphic computing hardware, interfacial VCM or redox transistor can open new perspectives in the long term. To this end, there is an urgent request to further develop VCM devices enhancing new properties through a combined synergetic development based on materials design, physical and electrical characterizations and multiscale modelling to support the microscopic understanding of the link between the device structure and the electrical characteristics. Moreover, the device development targeting braininspired computing systems can only go hand-in-hand with theory and architectures design in a holistic view.

Acknowledgements

This work was partially supported by the Horizon 2020 European projects MeM-Scales (Grant No. 871371), MNEMOSENE (Grant No. 780215), and NEUROTECH (Grant No. 824103); in part by the Deutsche Forschungsgemeinschaft (SFB 917); in part by the Helmholtz Association Initiative and Networking Fund under Project Number SO-092 (Advanced Computing Architectures, ACA) and in part by the Federal Ministry of Education and Research (BMBF, Germany) in the project NEUROTEC (Project Numbers 16ES1134 and 16ES1133K).

4. Electrochemical metallization cells

Ilia Valov

Research Centre Juelich

4.1. Status

Electrochemical metallization memories were introduced in nanoelectronics with the intention to be used as memory, optical, programmable resistor/capacitor devices, sensors and for crossbar arrays and rudimentary neuromorphic circuits by Kozicki et al [86, 87] under the name programmable metallization cells. These types of devices are also termed conductive bridging random access memories or atomic switches [88]. The principles of operation of these two electrode devices using thin layers as ion transporting media are schematically shown in figure 6. As electrochemically active electrodes Ag, Cu, Fe or Ni are mostly used and for counter electrodes Pt, Ru, Pd, TiN or W are preferred. Electrochemical reactions at the electrodes and ionic transport within the device are triggered by internal [89] or applied voltage causing the formation of metallic filament (bridge) short-circuiting the electrodes and defining the low resistance state (LRS). Voltage of opposite polarity is used to dissolve the filament, returning the resistance to high ohmic state (HRS). LRS and HRS are used to define Boolean 1 and 0, respectively.

Figure 6. Principle operation and current–voltage characteristics of electrochemical metallization devices. The individual physical processes are related to the corresponding part of the I–V dependence. The figure is reproduced from [99].

Download figure:

Standard image High-resolution imageApart from the prospective for a paradigm shift in computing and information technology offered by memrsitive devices in general [8], ECMs provide particular advantages compared to other redox-based resistive memories. They operate at low voltages (∼0.2 V to ∼1 V) and currents (from nA to μA range) allowing for low power consumption. A huge spectrum of materials can be used as solid electrolytes, ionic conductors, mixed conductors, semiconductors, macroscopic insulators and even high-k materials such as SiO2, HfO2, Ta2O5 etc, predominantly in amorphous but also in crystalline state [90]. The spectrum of these materials includes 1D and 2D materials but also different polymers, bioinspired/bio-compatible materials, proteins and other organic and composite materials [91, 92]. The metallic filament can vary in thickness and may either completely bridge the device, or be only partially dissolved providing multilevel to analog behaviour. Very thin filaments are extremely unstable and dissolve fast (down to 1010 s) [93]. The devices are stable against radiation/cosmic rays, high energy particles and electromagnetic waves and can operate over a large temperature range [94, 95]. Due to these properties, ECMs can be implemented in various environments, systems and technologies. The typical applications are as selector devices, volatile, non-volatile digital and analog memories, transparent and flexible devices, sensors, artificial neurons and synapses [96–98]. The devices can combine more functions and are thought of as basic units for the fields of autonomous systems, beyond von Neumann computing and AI. Further development in the field is essential to realise the full potential of this technology.

4.2. Current and future challenges

Despite the apparent simplicity and ease of operation, ECM cells are complex nanoscale systems, relying on redox reactions and ion transport at extreme conditions [100]. Despite low absolute voltages and currents, the devices are exposed to electric fields of up to 108 V cm−1 and current densities of up to ∼1010 A cm−2. There is no other example in the entire field of electrochemical applications even approaching these conditions. Small device volume, harsh and strongly non-equilibrium conditions make the understanding of fundamental processes and their control extremely challenging. The latter results in less precise (or missing) control over the functionalities and reliable operation. Indeed, maybe the most serious disadvantage of ECMs is the large variability in switching voltages, currents and resistive states. Additional problems are fluctuations and drift of the resistance states, as well their chemically and/or physically determined instabilities (figure 7).

Figure 7. Schematic differences between ideal cells (left) and real cells accounting for interface interactions occurring due to sputtering conditions, chemical interactions or environmental influences. Physical instabilities/dissolution of the electrode, leading to clustering and formation of conductive oxides in ECM devices (middle). Chemical dissolution of the electrode and formation of insulating oxides. (Right) The figure is modified from [101].

Download figure:

Standard image High-resolution imageSeveral notable issues should be taken in consideration: (1) missing unequivocal experimental value about what part of the applied current is carried by ions and by electrons. Whereas in macroscopic systems these numbers are constant, in nanoscale ECMs they may vary depending on the conditions and charge concentration. (2) The charge/ion concentration may vary with time. Due to the small volume, it is easy to enrich or deplete the film with mobile ions (acting as donors/acceptors) during the operation cycles, resulting in deviation of the switching voltages and currents and finally to failures. (3) Again, due to the small volume, even a low number of foreign atoms/ions (impurities) will cause considerable changes in the electronic properties. Impurities or dopants as well as the matrix significantly alter the characteristics due to effects on the switching layer [102, 103] or on the electrodes [104]. (4) Effects of protons and oxygen; both can be incorporated either during device preparation (e.g. lithography steps, or deposition technique e.g. ALD etc) or from the environment [105], even if a capping layer is used. Many devices even cannot operate without the presence of protons and many electrode materials such as Cu, Mo, W or TiN etc can be partially or even are fully oxidized by environmental factors. (5) Interfacial interactions are commonly occurring at the electrode/solid electrolyte interface. The thickness of these interfacial layers can sometimes even exceed the thickness of the switching layer and inhibit or support reliable operation [101]. All these effects have their origin in the nanosize of the devices and highly non-equilibrium operating conditions.

4.3. Advances in science and technology to meet challenges

Addressing the challenges and issues that still limit the implementation of ECM devices in the praxis, should be considered on different levels. On a fundamental level, an in-depth understanding of the nanoscale processes and rate-limiting steps that determine the resistive switching mechanism is essential. To overcome the current limitations the theory should be further improved to account not only quantitatively but also qualitatively for the fundamental differences in thermodynamics and kinetics on the nanoscale compared to the macroscale. The scientific equipment needs to be improved to address the demand on sufficient mass and charge sensitivity as well as lateral and vertical resolution. Focus should be set on in situ and in operando techniques under real conditions enhanced by high time and imaging resolutions.

On a materials level, efforts should be made to understand and effectively use the relation between physical and chemical material properties, such as chemical composition, non-stoichiometry, purity, doping, density, thickness and mechanical properties and device performance and functionalities. A more narrow selection from the vast sea of ECM materials should be made on which systematic research should be performed. The final task to be achieved by a selective materials research approach is establishing a universal materials treasure map.

On a device/circuit/technology level, common problems such as the sneak path problem still need to be addressed. Limitation of interactions between devices and high-density integration (also within CMOS) needs to be further improved. The control during the deposition of layer materials should be adjusted to avoid layer intermixing, contaminations and incorporation of impurities. In many cases, deposition of thin films of non-oxidized elements or components with higher affinity to oxygen such as W, Mo, TiN or oxygen-free containing chalcogenides is possible only after special pre-care. The technological processes must be adapted and regularly controlled to ensure high quality and defined chemical composition. Additional efforts should also be made to integrate devices utilizing different functionalities and allowing for higher degree of complexity. The internal electromotive force should be further explored and utilized in respect to autonomous systems and applications in space technologies and medicine should also be further developed.

These issues are in fact highly interrelated and closely depend on each other. Most important on the current stage of development of ECM devices is to understand and control the relation between material properties, physical processes and device performance and functionalities. This knowledge will result in improved reliability of the devices and advanced technology.

4.4. Concluding remarks

ECM devices have been intensively developed in the last 20 years however, still not reaching their full potential. Opportunities for various applications in the fields of nanoelectronics, nanoionics, magnetics, optics, sensorics etc and the prospectsfor implementation as basic units in neuromorphic computing, big data processing, autonomous systems and AI are impeded by insufficient control of the nanoscale processes and incomplete knowledge on the relation between material properties, fundamental processes and devices characteristics and functionalities. To achieve these tasks, not only existing theory but also the scientific equipment and characterization techniques should be further improved allowing a direct insight in the complex nanoscale phenomena. Interacting and complementing fundamental and applied research is the key to address these issues in order to deploy the advantages and opportunities offered by the electrochemical metallization of cells into modern information and communications technologies.

5. Nanowire networks

Gianluca Milano1 and Carlo Ricciardi2

1Advanced Materials Metrology and Life Sciences Division, INRiM (Istituto Nazionale di Ricerca Metrologica), Torino, Italy

2Department of Applied Science and Technology, Politecnico di Torino, Torino, Italy

5.1. Status

The human brain is a complex network of about 1011 neurons connected by 1014 synapses, anatomically organized over multiple scales of space, and functionally interacting over multiple scales of time [106]. Synaptic plasticity, i.e. the ability of synaptic connections to strengthen or weaken over time depending on external stimulation, is at the root of information processing and memory capabilities of neuronal circuits. As building blocks for the realization of artificial neurons and synapses, memristive devices organized in large crossbar arrays with a top-down approach have been recently proposed [107]. Despite the state-of-art of this rapidly growing technology demonstrated hardware implementation of supervised and unsupervised learning paradigms in ANN, the rigid top-down and grid-like architecture of crossbar arrays fails in emulating the topology, connectivity and adaptability of biological neural networks, where the principle of self-organization governs both structure and functions [106]. Inspired by biological systems (figure 8(a)), more biologically plausible nanoarchitectures based on self-organized memristive nanowire (NW) networks have been proposed [16, 108–112] (figures 8(b) and (c)). Here, the main goal is to focus on the emergent behaviour of the system arising from complexity rather than on learning schemes that require addressing of single ANN elements. Indeed, in this case main players are not individual nano objects but their interactions [113]. In this framework, the cross-talk in between individual devices, that represents an unwanted source of sneak currents in conventional crossbar architectures, here represents an essential component for the network emerging behaviour needed for the implementation of unconventional computing paradigms. NW networks can be fabricated by randomly dispersing NWs with a metallic core and an insulating shell layer on a substrate by a low-cost drop casting technique that does not require nanolithography or cleanroom facilities. The obtained NW network topology shows small-world architecture similarly to biological systems [114]. Both single NW junctions and single NWs show memristive behaviour due to the formation/rupture of a metallic filament across the insulating shell layer and to breakdown events followed by electromigration effects in the formed nanogap, respectively (figures 8(e) and (h)) [16]. Emerging network-wide memristive dynamics were observed to arise from the mutual electrochemical interaction in between NWs, where the information is encoded in 'winnertakes-all' conductivity pathways that depend on the spatial location and temporal sequence of stimulation [115–117]. By exploiting these dynamics, NW networks in multiterminal configuration can exhibit homosynaptic, heterosynaptic and structural plasticity with spatiotemporal processing of input signals [16]. Also, nanonetworks have been reported to exhibit fingerprints of self-organized criticality similarly to our brain [108, 118, 119], a feature that is considered responsible for optimization of information transfer and processing in biological circuits. Because of both topological structure and functionalities, NW networks are considered as very promising platforms for hardware realization of biologically plausible intelligent systems.

Figure 8. Bio-inspired memristive NW networks. (a) Biological neural networks where synaptic connections between neurons are represented by bright fluorescent boutons (image of primary mouse hippocampal neurons); (b) self-organizing memristive Ag NW networks realized by drop-casting (scale bar, 500 nm). Adapted from [16] under the terms of Creative Commons Attribution 4.0 License, copyright 2020, Wiley-VCH. (c) Atomic switch network of Ag wires. Adapted from [112], copyright 2013, IOP Publishing. (d) and (e) Single NW junction device where the memristive mechanism rely on the formation/rupture of a metallic conductive filament in between metallic cores of intersecting NWs under the action of an applied electric field and (f) and (g) single NW device where the switching mechanism, after the formation of a nanogap along the NW due to an electrical breakdown, is related to the electromigration of metal ions across this gap. Adapted from [16] under the terms of Creative Commons Attribution 4.0 License, copyright 2020, Wiley-VCH.

Download figure:

Standard image High-resolution image5.2. Current and future challenges

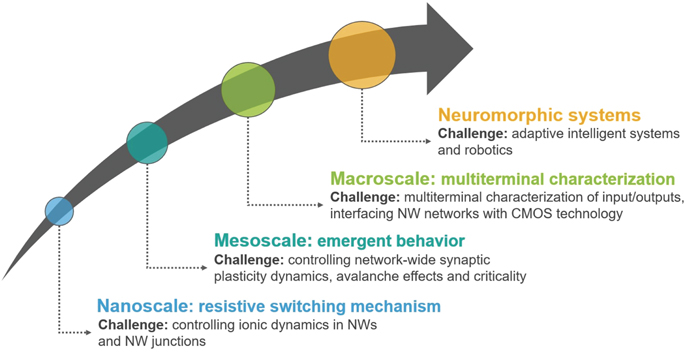

Current and future challenges for hardware implementation of neuromorphic computing in the bottom-up NW network will need integrated theoretical and experimental multidisciplinary approaches involving material physics, electronics engineering, neuroscience and network science (an overview of the roadmap is shown in figure 9). In NW networks, unconventional computing paradigms that emphasize the network as a whole rather than the role of single elements need to be developed. In this framework, great attention has recently been devoted to the reservoir computing (RC) paradigm where a complex network of nonlinear elements is exploited to map input signals into a higher dimensional feature space that is then analysed by means of a readout function. In this framework, nano-networks have been proposed [120] and experimentally exploited as 'physical' reservoirs for in materia implementation of the RC paradigm [121–123]. However, fundamental research is needed to address remaining challenges. The design and fabrication of multiterminal memristive NW networks able to process multiple spatio-temporal inputs with nonlinear dynamics, fading memory (short-term memory) and echo-state properties minimizing energy dissipation are needed. Importantly, these NW networks have to operate at low voltages and currents to be implemented with conventional electronics. These represent challenges from the material science point of view, since to achieve this goal NWs have to be optimized in terms of core–shell structures for tailoring ionic dynamics underlying resistive switching mechanism. Also, a fully-hardware RC system requires hardware implementation of the readout function for processing outputs of the NW network physical reservoir. Despite the neural network readout can be implemented by means of crossbar arrays of ReRAM devices to realize a fully-memristive architecture as demonstrated in reference [121], the software/hardware for interfacing the NW network with the ReRAM readout represents a challenge from the electronic engineering point of view. To fully investigate the computing capabilities of these self-organized systems, modelling of the emergent behaviour is required for understanding the interplay in between network topology and functionalities. This relationship can be explored with a complex network approach by means of graph theory metrics. Current challenges in understanding and modelling the emergent behaviour of NW networks rely on the experimental investigation of resistive switching mechanism in single network elements, including a statistical analysis of inherent stochastic switching features of individual memristive elements. Also, combined experiment and modelling are essential to investigate hallmarks of criticality including short and longrange correlations among network elements, power-law distributions of events and avalanche effects by means of an information theory approach. Despite scale-free networks operating near the critical point similarly to the cortical tissue are expected to enhance information processing, understanding how critical phenomena affect computational capabilities of self-organized NW networks still remain an open challenge.

Figure 9. Roadmap for the development of neuromorphic systems based on NW networks.

Download figure:

Standard image High-resolution image5.3. Advances in science and technology to meet challenges

Understanding dynamics from the nanoscale, at the single NW/NW junction level, to the macroscale where a collective behaviour emerges is a key requirement for implementing neuromorphic-type of data processing in NW networks. At the nanoscale, scanning probe microscopy techniques can be employed to assess local network dynamics. In particular, Conductive Atomic Force Microscopy (C-AFM), that provides information on the local NW network conductivity, can be exploited not only as a tool to investigate changes of conductivity after switching events, but also for locally manipulating the electrical connectivity at the single NW/NW junction level [124]. Scanning thermal microscopy can be employed to locally measure the network temperature with spatial resolution <10 nm, well below the resolution of the conventional lock-in thermography [116], providing information about nanoscale current pathways across the sample. At the macroscale, advances in electrical characterization techniques are required for analysing the spatial distribution of electrical properties across the network and their evolution over time upon stimulation. In this framework, one-probe electrical mapping can be adopted for spatially visualizing voltage equipotential lines across the network [125], even if this scanning technique does not allow an analysis of the network evolution over time. In contrast, non-scanning electrical resistance tomography (ERT) have been recently demonstrated as a versatile tool for mapping the network conductivity over time at the macroscale (∼cm2) [126]. Thus, ERT can allow in situ direct visualization of the formation and spontaneous relaxation of conductive pathways, providing quantitative information on the conductivity and morphology of conductive pathways in relation with the spatio-temporal location of stimulation. Advancements in the synthesis of core–shell NWs are required for engineering the insulating shell layer surrounding the metallic inner core that acts as a solid electrolyte. Taking into advantage of the possibility of producing conformal thin films with control of thickness and composition at the atomic level, atomic layer deposition (ALD) represents one of the most promising techniques for the realization of metal-oxide shell layers. Also, alternative bottom-up nanopatterning techniques such as direct self-assembly (DSA) of block copolymers (BCPs) can be explored for the fabrication of selforganizing NW networks with the possibility of controlling correlation lengths and degree of order [127]. This approach can allow a statistical control of network topology. Customized characterization techniques, from the nanoscale to the macroscale, coupled with a proper engineering of NW structure/materials and network topology, will ultimately enable the control of network dynamics needed for efficient computing implementations.

5.4. Concluding remarks

Self-organized NW networks can provide a new paradigm for the realization of neuromorphic hardware. The concept of nanoarchitecture, where the mutual interaction among a huge number of nano parts causes new functionalities to emerge, resembles our brain, where an emergent behaviour arises from the synaptic interactions among a huge number of neurons. Besides RC that represents one of the most promising computing paradigms to be implemented on these nanoarchitectures, unconventional computing frameworks able to process sensor inputs from the environment can be explored for online adapting of robot behavior. In perspective, more complex network dynamics can be explored by realizing computing nanoarchitectures composed of multiple interconnected networks or by stimulating networks with heterogeneous stimuli. In this scenario, NW networks that can learn and adapt when externally stimulated—thus mimicking the processes of experience-dependent synaptic plasticity that shapes connectivity of our nervous system—would not only represent a breakthrough platform for neuro-inspired computing but could also facilitate the understanding of information processing in our brain, where structure and functionalities are intrinsically related.

Acknowledgements

This work was supported by the European project MEMQuD, code 20FUN06. This project (EMPIR 20FUN06 MEMQuD) received funding from the EMPIR programme co-financed by the participating states and from the European Union's Horizon 2020 research and innovation programme.

6. 2D materials

Shi-Jun Liang1, Feng Miao1 and Mario Lanza2

1National Laboratory of Solid State Microstructures, School of Physics, Collaborative Innovation Center of Advanced Microstructures, Nanjing University, Nanjing, China

2Physical Sciences and Engineering Division, King Abdullah University of Science and Technology (KAUST), 23955-6900 Thuwal, Saudi Arabia

6.1. Status

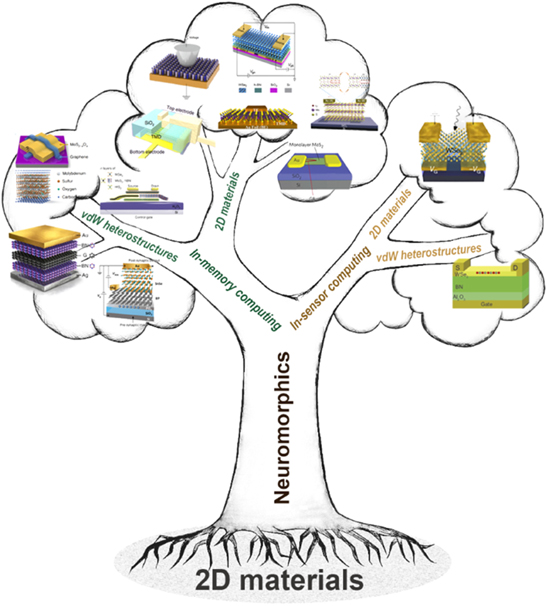

With more and more deployed edge devices, huge volumes of data are being generated each day and are waiting for real-time analysis. To process these raw data, these data have to be collected and stored, which are accomplished in sensors, memory unit and computing units, respectively. This usually gives rise to large delay and high energy consumption, which becomes severe with an explosive growth in data generation. Computing in sensory or memory devices allows for reducing latency and power consumption associated with data transfer [128] and is promising for real-time analysis. Functional diversity and performances of these two distinct computing paradigms are largely determined by the type of functional materials. Two dimensional (2D) materials represent a novel class of materials and show many promising properties, such as atomically thin geometry, excellent electronic properties, electrostatic doping, gate-tuneable photoresponse, superior thermal stability, exceptional mechanical flexibility and strength, etc. Stacking distinct 2D materials on top of each other enables creation of diverse van der Waals (vdW) heterostructures with different combinations and stacking orders, not only retaining the properties of dividual 2D components but also exhibiting additional intriguing properties beyond those of individual 2D materials.

2D materials and vdW heterostructures have recently shown great potential on achieving insensor computing and IMC, as shown in figure 10. There has intense interest in exploring unique properties of 2D materials and their vdW heterostructures for designing computational sensing devices. For example, photovoltaic properties of gate-tuneable p–n homojunction based on ambipolar material WSe2 were exploited for ultrafast vision sensor capable of processing images within 50 ns [129]. Employing gate-tuneable optoelectronic response of WSe2/h-BN vdW heterostructure can emulate the hierarchical architecture and biological functionalities of human retina to design reconfigurable retinomorphic sensor array [130].